Friday, November 6, 2009

Agile Metrics

I'm only partway through the video (it's about 2 hrs long) but so far I'm really enjoying and agreeing with what Dave Nicolette had to say about Agile Metrics at Agile 2009. If this is a topic that's of interest to you - especially if you're a Project Manager! - then I recommend you give this video a watch.

Labels:

Metrics,

Project Management

Tuesday, November 3, 2009

Is Agile Just A Placebo?

A good friend of mine is a big fan of Linda Rising, and so anytime Ms. Rising's name pops up, I tend to take notice. This 15-minute video of her speaking about the Placebo Effect is quite interesting and typical of her style.

Saturday, October 31, 2009

The First Retrospective

Last week I spent Thursday morning facilitating a project Retrospective for a local development group who'd never held a Retro before. As part of my engagement, I was also asked to do some "training the trainers" in the sense of working with a couple of people there who were interested in taking on the facilitation role in the future. The thinking on the part of the executive sponsor was that they wanted to bring an expert in for an early Retrospective (or more) but not be reliant on him forever, which is a sentiment that I totally agree with and was happy to support.

The preparation for the session started several days beforehand, as I e-mailed questions to the sponsor and also had a phone conversation with him. I'd sketched out a possible structure for the Retro prior to that, and then the two of us hammered out the final details during that call. One of the most important factors we talked about was duration, which ultimately ended up being 2.5 hours. I would have loved to have scheduled it for longer since it was covering an entire 6- or 7-month project. However, the flip side of the coin was that this was going to be everyone's first Retro, and there was concern that being subjected to a full-day session first time out would be too much, too soon. That led to the decision for a meeting running from 8:30 to 11:00 a.m.

When I got on-site Thursday morning, I met with the future-facilitators for an hour and described what would be happening. The three of us got the room ready by putting up some large sticky notes (a mostly-blank timeline, ground rules and the agenda) and I provided a few thoughts on what I believe the facilitator's role to entail:

- keeping the group focused on events and learning, not on people and blaming

- warming up 'cold' rooms and cooling down 'hot' ones

- nipping any personal attacks in the bud

- avoiding ruts in the proceedings by keeping things moving forward

- making each of the attendees feel comfortable with the process itself

Most of the attendees were slow to open up very much, as you'd expect from people in their first Retrospective. Nothing too contentious came out until more than halfway through, and even then it was offered up fairly tentatively. By the 2.5-hour mark, though, things were getting much more interesting... just as time was up! As one of the trainee facilitators pointed out, it was all we could do to get several of the attendees out of the room even half an hour after it was over! And these were very busy folks, some of whom were missing other commitments in order to stay a little later and chat with us about what they'd just gone through. That sort of thing is really rewarding to see!

The results of the 1-page survey that I handed out at the end were very positive (scores averaged between 8.2 and 9.0 out of 10). In our 1-hour debrief after the session (half of which was chewed up by the hanger-on-ers!), the two trainees did a great job cleaning up the room (while I was waylaid with questions) and then talked about how interesting it had been for them. They were concerned about being able to do what I had done, but I assured them it was easier than it looked! In fact, the toughest part for me was that I was on my feet for nearly the entire two and a half hours, an amount of standing well beyond anything that I've done in the past several months. I'd had the presence of mind to bring a big bottle of water with me so I wouldn't get parched, and yet I didn't think to sit down for more than about 2 minutes over the course of the meeting!

I don't know yet whether or not I'll be invited back for additional work, but this first contract was very enjoyable, indeed. The participants were committed to the process and willing to open up in front of each other and a stranger, and you can't ask much more than that!

Labels:

Learning,

Project Management,

Training

Monday, October 26, 2009

This Looks Like A Job For... AgileMan!

Later this week I'm scheduled to facilitate a Retrospective for a local development group. This will be their first ever Retro, and so they've decided to bring in an outside expert to lead it. I haven't done one in a while, but back in the heyday of my AgileMan stint I was facilitating about one per week. Of course, that was with people who I knew (to some degree or another) and this is with strangers... but I'm hoping it's like riding a bicycle, regardless of who makes the tires!

So in preparing for this engagement, I've been working with the executive sponsor to sort out the logistics for the event (what type of room, duration, number and makeup of attendees, etc.) as well as to determine what kind of format might work best. Because it's a Retrospective for a recently-completed project that ran for nearly half a year, we're going to do a Timeline as our main source for gathering data. In an ideal world, a session covering this long a period of time would probably be a full day in length. However, since these folks have never been in one before, we opted for a shorter format (2.5 hours). Because of that, I want to get as much data out on the table as quickly as possible, and a Timeline seemed a good vehicle for that.

Once we've completed that activity and found a few hot spots (via a voting mechanism), then the plan is to use the Force Field Analysis process to uncover what forces have worked for and against whatever it is that we're discussing. From that I hope to be able to lead the team in agreeing on a few Action Items that can be applied on the next project to either weaken some of the opposing forces or strengthen the supporting ones.

It's not the most elaborate agenda I've ever set out for a Retrospective, but I'm trying to remain mindful of the fact that this is something new for this group. I don't want to run the risk of overdoing it on the first step of the journey, and these activities seem appropriate for striking a balance between sophistication and simplification. Of course I won't really know how well I did until I hand out my 1-page Feedback Survey at the end of the session, and then review the results. That's always been my best metric for these jobs, and I've learned a lot from the feedback people have given me on them.

And naturally, if you're looking for help with a Retrospective yourself, or any other sort of assistance with an Agile topic, don't hesitate to contact me (AgileMan@sympatico.ca). I'm always on the lookout for jobs for ol' AgileMan (superhero at large)!

Tuesday, September 29, 2009

Good Reads On Pair Programming

Just a quick link to this blog entry from someone who feels very passionately about the benefits of pair programming but doesn't believe that most software organizations could pull it off. He even provides 10 reasons why.

And then for the opposite view, see this, a much shorter article that argues, in rebuttal of the first, that pair programming could be for everyone.

Thursday, September 10, 2009

Getting The Requirements Right

Apparently this amusing blog post was recently making the rounds at my old workplace. In it, the author imagines what designing a new house would be like if architects had to deal with the same sort of loosey-goosey requirements that software developers typically do. One of my favourite lines is "With careful engineering, I believe that you can design this [75-foot swimming pool] into our new house without impacting the final cost." As programmers, we're well familiar with the "keep the design open-ended so that all possible future requests can be done quickly and cheaply" model of thinking.

My wife Vicki is currently finishing up a contract position as a Business Analyst. While the exact particulars of that role differ from place to place, in this case it means that she splits her time between the product folks and the development team. In effect, she's the liaison between the two groups, responsible for taking "business-speak" and translating it into requirements that can be implemented by software types. It's a challenging tightrope act to pull off, because if she drifts too far in either direction she runs the risk of alienating the other group and thereby losing their trust and confidence. In her current environment, all of this is done in a fairly traditional (Waterfall) manner, meaning that she's spending inordinate amounts of time getting down - in writing - what the two areas of the company have agreed upon, long before the first line of code is written. And for many years, that was considered to be the optimal way to perform the requirements gathering portion of software projects.

The pitfalls to that approach are well known to anyone familiar with Agile:

- developers tend to focus on fulfilling the stated requirements rather than looking for ways to provide maximum value

- the product owners don't get any opportunities to try out what they've asked for before it's locked down, thereby missing the chance to make it better

- all features get started more or less at once, and any deliverables that end up being abandoned late in the project (when things inevitably run long) have usually had considerable work done on them, all of which was wasted effort that extended the project timeline unnecessarily

- there are all kinds of openings for aspects of the requirements to be "lost in translation" between the Product Manager and Business Analyst, or between the B.A. and the Programmers

- the development team is often kept at arm's length from the product team, reinforcing the "us vs them" mentality and denying both groups the opportunity to put their heads together and design something better than whatever each could come up with on their own

When you consider all of the obstacles standing in the way of success in a scenario like this, it's sometimes mind-boggling that any traditional software projects ever succeed (about 1/4 to 1/3 do, last I checked). All you need is a Product Manager like the one being parodied in the link above, or a Business Analyst who's weak in business-speak or requirements-speak (or both), or a Developer who isn't all that good at adhering to a written specification, and you end up with a delivery of functionality that isn't going to make anyone happy... assuming it ever emerges out of the Quality Assurance phase at all, after dozens or hundreds of last minute changes have been made to it once people actually got their hands on it outside the development team.

While an iterative approach might not work all that well when designing and building a home, it has a lot of advantages when it comes to software. For example, it's a rare product person who wouldn't relish the opportunity to touch and feel a new set of features early enough in the proceedings to allow for alterations. The trick, of course, is to get such a person's head around the notion that you'll be delivering their requirements in bits and pieces (in priority order, assuming that you can get them to sit down and actually prioritize their wish list!). Some people in that role have become so accustomed to an "all or nothing" setup that showing them one subset of finished functionality incorrectly conveys the impression that it's all done! I've also heard product representatives claim that they didn't have time to review features as they came out, which of course raises the question: what in your job description could possibly be more important than ensuring that the best possible features show up in your product(s)? That kind of thinking just seems nutty to me, but I'm sure they must have their reasons.

For the last big programming job I had, I was lucky enough to have the perfect business person. The project was the creation of our Meal Planner Application, which I wrote in our basement over the 2004 Christmas holidays. And Vicki was my "customer." She had a good idea what she wanted by way of functionality, and I just kept showing her what I thought she'd asked for, until we got it right. If she'd been forced to write out in full what she imagined she'd need, before I ever started coding, I doubt that we'd ever have gotten that thing off the ground. But instead we did it iteratively (long before I'd ever heard of Agile) and ended up with a product that's still being used today, almost 5 years later. It's really too bad more organizations can't see the value in working that way.

Thursday, August 27, 2009

What Motivates Creative Thinking?

A friend and former co-worker of mine pointed me in the direction of this video of Dan Pink speaking at this year's TED (Technology, Entertainment, Design) Talks, in which Mr Pink (not to be confused with the character from Reservoir Dogs!) provides some evidence-based thoughts on motivating creative thinking. I encourage everyone to go check it out.

After watching it, I started thinking about my own experiences in the software industry. Pink's Autonomy-Mastery-Purpose summation certainly matches what I've seen in the best of scenarios. And, of course, those three characteristics mesh perfectly with the principles of self-organization, continuous learning and prioritized backlogs that Agile is built upon.

From what I've seen, it's definitely no easy feat to create and sustain a culture in which all three of them are:

- valued in word,

- valued in deed,

- committed to at all levels of the organization, and

- not sacrificed in the name of "expedience" or "meeting a schedule".

I don't believe that those who fall down in the attempt, however, realize just how much they're losing by not trying harder. For one thing, you only get so many chances to introduce that kind of culture, after which you lose your credibility for a long time to come. Even when we were introducing Agile to our company in 2006, I met considerable resistance from folks who'd been there long enough to remember previous attempts at reorganization and empowerment, and were thus doubly skeptical of the results. There's also the flawed "we can't afford the schedule hit" aspect to failure in this area. One executive I came into contact with was always saying, "We'd love to build that kind of culture but there just isn't the time." Ironically, of course, whatever deadline was being worked feverishly toward would be missed (and missed and missed) anyway because of all the problems being ignored, meaning that the much-needed improvements were put off for no reason in the end. It becomes a vicious circle that's awfully hard to break out of.

The most creative work I've seen has always come from those who are given the latitude to wander down many paths, the tools and training that they themselves believe they need, and a general - rather than specific - direction to follow (so, "what", but not "how"). In some companies, I suppose, that sort of revolutionary work may not even be necessary or desired. But for the rest of the IT world, leaders do themselves and their organization a terrible disservice whenever they believe that they can simply dangle a reward in front of their heavily-supervised employees' eyes at random points in time and achieve greatness, a belief that Dan Pink so capably shows to be mistaken. When you do that, you end up with the most unimaginative of solutions, like a candle stuck to a wall with pushpins, dripping wax down onto your table.

Labels:

Incentives,

Leadership,

Learning

Wednesday, August 12, 2009

The Relationship Between Trust And Leadership

An article I was reading this morning got me thinking about how the quality of leadership within an organization relates to the level of trust that you'll find there. I'm not sure that the model I've come up with is perfect, but it seems to fit most of the scenarios that I've observed in my travels.

Basically, the relationship seems to be quite simple: the better the leadership, the more trust exists. This is one of those situations where you can perceive the effect (trusting and trustworthy players vs distrust between parties) in direct proportion to the causal factor (effective vs dysfunctional leadership), but it also can work in the opposite direction at times. I dedicated an entire chapter to this in my second AgileMan book (Issue # 46: Who Do You Trust?) but I think that I was still too close to the proceedings at that point to really see just how tightly tied together the two things were.

For example, a leader may start off willing to extend a great deal of trust out to his or her employees, only to feel "betrayed" by them if the same good faith isn't extended back. Imagine a team promising to complete some vital feature, giving "thumbs up" reports on it throughout the Iteration, and then falling well short of the goal in the end. That kind of result can cause a well-intentioned leader to change gears and begin compromising principles: becoming more of a micromanager, reducing the amount of freedom allowed to the team, or just generally dictating more of "how" on future work. In one way, I suppose, we could still characterize that unhappy outcome as being the result of poor leadership. After all, in an Agile environment at least, we expect leadership to emerge within teams, as well. So in my sample scenario, the team that "green-shifted" their situation right up until the end failed to demonstrate the type of maturity, honesty and transparency that we'd expect from a well-performing Agile group.

Just as likely, though, it's the manager or executive who failed to fulfill his or her responsibilities that leads to the breakdown. I certainly saw numerous instances of that in my role as Agile Manager, and in each case the result was a weakening of the bonds of trust between the organizational layers of the corporate pyramid. If a Product Manager promises to be available but isn't; if an executive fails to provide a vision of where the company is going; if team members are blamed for dropped balls that were actually someone else's responsibility... each of these signs of poor leadership eats away at the foundation of trust within the organization. And that's not just a bad thing on paper, either.

Among the many ways in which a lack of trust hurts an organization, I saw:

- time wasted as people (in both groups) sat around and bitched about how they couldn't trust the other group any longer

- wasteful defensive measures planned out and enacted as ways to guard against the possibility of being let down or thrown under the bus by the other group

- the baggage of past betrayals brought up, again and again, in almost every new interaction, making it nearly impossible to ever achieve a fresh start

- a complete reversal of the empowerment model that Agile depends on, as more and more conversations devolved into "contract negotiation," with each side wanting detailed records of exactly what was discussed in order to fend off future allegations of failure

- a very unhealthy "us vs them" attitude that benefited no one and drove morale to continually lower levels everywhere

What that tells us, of course, is that choosing your leaders in an Agile environment is all the more important, and not something that should necessarily be done by the same old rules of the past. If you make that mistake, chances are you'll be dealing with trust issues for years to come.

Labels:

Leadership,

Learning,

Trust

Wednesday, July 22, 2009

Why Transparency Matters

Sometimes it astounds me just how much transparency exists in the world today, and how much benefit we derive from it. In some cases, it's the incredible access to information that we have, mostly thanks to the Internet, while in others, it's simply how willing people are to share what they're doing.

As examples of the former, I started making a mental note this morning of some of the data that's now almost literally "at my fingertips" that would have, even a decade or so ago, been at least a phone call or a trip somewhere away:

- animated radar images of the current precipitation patterns in my locale, so that I can plan my biking forays accordingly

- real-time, up-to-the-minute sports scores, including details like who's pitching, who assisted on what goals, and how many shots have been taken

- specifications for devices like pool heaters and DVD players that you may have long since lost the paper copies of

- forms, as well as forums, related to topics like Canadian Income Tax, immigration policy and travel destinations, just to name a few

- dictionary and encyclopedia content enabling anyone to write a factual, typo-free essay (or blog post) without having to even get up out of their chair

- transit system schedules so that you know exactly when to be where to catch what (bus/train/plane)

- and thousands and thousands more

So what does any of this have to do with Agile, or software development in general? Well, I think that we're realizing many of the benefits of transparency in the 21st century (as touched on above), but that doesn't necessarily always get applied to how we run our development projects. In an Agile culture, we strive for transparency in some very big ways - Burndown Charts, strictly-prioritized Product Backlogs, Retrospectives - all of which are important and positive. If you compare that to a more traditional (Waterfall-style) software project, though, you can see that the same degree of transparency is seldom achieved there. Team members may seek to hide some of what they're doing out of fear that the work won't be approved if the bosses find out about it. Project coordinators may talk about, or even hope for, some sort of post-mortem to be held after the product finally ships, but rarely do those mythical meetings actually happen. Status reports all too often end up being "green-shifted" by those responsible for providing the data, in order to keep the alarm bells from sounding early on. These are all instances where the lack of transparency hurts the success of the project.

Even in an Agile environment, however, there can still sometimes be a resistance to openness. I certainly encountered many situations in my two years as Agile Manager that spoke to this point. Whether it be shutting down conversations or blogs that are bringing to light unsavoury failings among management, or a reluctance to share with others the challenges that are being encountered (because it might be used against you later), it's no easy task to adopt a culture of transparency. Politics can get in the way, as can pride, fear and an exaggerated sense of self-preservation. None of those are trivial obstacles to overcome.

Unfortunately, in virtually every case this tendency toward hiding facts ends up doing more harm than good. Decisions end up being made based on faulty data, morale problems develop thanks to a cloak of secrecy that seems to always inspire conspiracy theories much worse than the reality of the situation, and people waste time jumping through hoops trying to find "the person with the answer." When I think about how efficiently I can use my time today, thanks to the wealth of information available to me, compared to what life was like when I first started working... it's like night and day. And that's because, given quick access to the necessary data, it's always easier to make a reasonable, informed decision. That perspective sometimes gets lost in the heat of the office battles, though. Those of us in the Information Technology industry need to remember that, as we go about our day-to-day business of cranking out top-notch software products, information is king. And the king belongs to everyone.

So if you're reading this from your desk at work right now, ask yourself: what bit of data are you holding on to that would better serve the company if it were shared? Don't betray any confidences (personal or professional), but rather look for something that you've simply been lax in getting out there, or that you know would help someone else if you made the effort to tell them about it. Sometimes that's all it takes.

Tuesday, July 14, 2009

Learning May Actually Help Keep Us Young

I'm reading a book called The Brain That Changes Itself, by Norman Doidge, M.D. In it, the author describes many different examples of the human brain's neuroplasticity, which is to say: its ability to change itself based on various stimuli. For decades, the belief was that each part of the brain had a certain, unchangeable function to perform. This implied that an injury to any part of the brain meant the loss of that type of functionality, forever. More recently, though, neurologists have begun to uncover many examples where the organ inside our skulls is actually much more adaptive than that rigid model would allow for.

One of the topics that Dr Doidge covers which certainly applies to Agile is the importance of continuing to learn, even after completing the scholastic period of our lives. In studies that have been done with those in their sixties and beyond, there's clear evidence that elderly individuals who seek out ways to engage their mind - such as by learning a new language or dance, taking on a new hobby or even just visiting locales that they've not been to before - are less likely to suffer from dementia and other symptoms typically related to "the aging of the brain." New neuro-connections continue to form as we get older, but only if we exercise our mental faculties during that latter stage of life. As Dr Doidge points out, it's still possible that the relationship is a correlation rather than a causal one, or even causal but in the other direction. In other words, those who are actively keeping their minds fine-tuned may only be able to do so exactly because they aren't losing their edge (rather than the activities being responsible for the sharp minds). But research is making that seem less and less likely, and so I'm inclined to believe that there is more of a positive cause and effect relationship at work in those scenarios, and certainly the rest of the book is a tour of amazing brain-alterations that have been observed by researchers.

So if we accept - optimistically, perhaps! - that learning really does help to fight off some of the effects of aging (such as senility and dementia), then how do we adopt a way of life that promotes learning? In my capacity as Agile Manager between 2006 and 2008, I encountered a lot of individuals who truly epitomized the answer to that question. For them, the focus was always on discovering new ways to make their product better. Often, the people around them (especially in management) were fixated on "hitting a date," which in practice translated to "cutting corners." The friction caused by the intersection of those two very different approaches was probably healthy, in the final analysis, although it rarely felt that way at the time.

In life, though, we're less likely to constantly run into schedule pressures in quite the same way as we do in our vocation as software craftspeople. Granted, there may never seem to be enough time in the day, but we still get to decide - within reasonable bounds, set by family obligations - just how to spend our spare time. Do we "veg out" and turn our brain off every chance we get, or do we look for ways to stimulate our mind and imagination? Are we always satisfied with the way things are, or do we look for paths to improvement? Have we locked ourselves into a comfortable pattern, or are we still growing in ways not related to our waistlines?

I obviously have a lot more free time now than I did just a year ago. Even so, I've made a conscious effort to spend significant portions of it reading, writing and generally trying to bring my overall understanding of the world around me to a higher level. I'm also finding that my "second career" as a Math tutor is requiring no end of learning on my part (how's that for irony?) as I discover that every child really is different, and therefore no one approach works for every situation.

Will any of that do any good? Am I still destined to end up senile (or, as AgileBoy would put it, "more senile")? I don't have any answers, but I think it's important that we all keep asking questions.

Thursday, July 2, 2009

How Can Productivity Really Be Increased?

As our current Recession continues to wreak havoc across the employment ranks, one topic that we'll all surely hear more of is "increasing productivity." It's the conjoined twin of "reducing costs," after all, which has already proven to be a popular refrain since last Fall. Once a company gets to the point where it doesn't believe that it can effectively reduce the workforce any further, the next logical step is to look for ways to get more out of whatever employee base remains. I suspect that that's already happening; if it isn't, then it probably soon will be. (This, too, shall pass... but maybe not anytime soon.)

I want to say right off the top: increasing productivity by making people work longer hours isn't the answer. It may work in the very short term, but it certainly doesn't over the long haul. All kinds of studies have proven this, to the point where I'm not even going to bother citing them here (this stance against sustained overtime should be accepted by now, I hope).

So how do people become more productive? Since this is an Agile blog, I'm talking here about the so-called "knowledge worker" industry, rather than something more manual-based, where productivity improvements often take the form of automation. It's much harder to replace a Computer Programmer, Project Manager or Business Analyst with a machine or a piece of software, so just how are productivity boons realized?

The answer that was already floating around when I first hit the industry back in the mid-80s was, "Work smarter." Now, it was also the case that not too many people had any notion of specific ways in which to accomplish that, but it was still a worthwhile goal. What I learned, over time, was that working smarter required a certain amount of reflection and adaptation, both of which fit quite nicely with the principles of Agile. Specifically, you could increase productivity if you were willing to root out, and then call out, parts of your process that were inefficient. The Toyota Lean Manufacturing approach essentially crystallized this mindset and represents the purest application of it that I've yet encountered. It's one of those things that's easier said than done, though, and it requires a superior style of leadership that's quite frankly lacking in most organizations.

But even if you work in an environment that's not based around Lean, I think that you can still apply some of the same principles. Some of your success will be limited by how much empowerment exists, since making change in an empowerment vacuum is, if not impossible, at least ridiculously difficult. Therefore assessing the latitude with which you can effect change is probably the first step to take.

Assuming that you really do want to become more productive and you have at least some wiggle room within which to operate, then the next thing to do is look for obvious inefficiencies in your current processes. In Lean, I believe these are referred to as "waste." One way to spot these opportunities involves doing some sort of workflow analysis, paying particular attention to items that either introduce delays, or which seem to have limited value. There's more to it than that, but generally speaking you're taking a critical look at "how stuff gets done" and trying to identify those areas where the effort required isn't exceeded by the reward delivered.

As an example, imagine a process in which one step requires a meeting between representatives of several different departments, during which the various attendees provide feedback, and ultimately approval, on Business Requirements. It might be the case that setting up such a meeting often introduces a delay of up to several weeks, due to the lack of availability of the players. Therefore, you might actually be able to shave a project's timeline considerably if you could find a way to gather that same feedback and approval without requiring everyone to physically attend a meeting. Perhaps it could be done electronically, or in two or more smaller groups... regardless, the goal would be to see if it was possible to accomplish the same outcome without incurring the large elapsed-time cost.
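One rough way to do that kind of workflow analysis is to record, for each step, the hands-on effort versus the elapsed time the work spends waiting, and then see which steps contribute the most waiting. Here's a minimal Python sketch, assuming you can estimate those two numbers per step. The ratio it computes is a Lean-style measure often called flow efficiency; the class, function names, step names and figures are all hypothetical, loosely modelled on the meeting example above:

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    work_days: float  # hands-on effort actually spent on the step
    wait_days: float  # elapsed time the work sits idle (e.g. waiting for a meeting slot)


def flow_efficiency(steps):
    """Fraction of total elapsed time spent actually working."""
    work = sum(s.work_days for s in steps)
    elapsed = sum(s.work_days + s.wait_days for s in steps)
    return work / elapsed if elapsed else 0.0


def biggest_delays(steps, top=3):
    """Steps with the most waiting time, largest first."""
    return sorted(steps, key=lambda s: s.wait_days, reverse=True)[:top]


# Hypothetical process, in working days.
process = [
    Step("Write Business Requirements", 5, 0),
    Step("Cross-department review meeting", 0.5, 15),
    Step("Development", 20, 2),
    Step("Testing", 8, 3),
]

print(f"Flow efficiency: {flow_efficiency(process):.0%}")
for step in biggest_delays(process, top=2):
    print(f"{step.name}: {step.wait_days} days waiting")
```

A step that costs half a day of effort but weeks of waiting, like the review meeting here, is exactly the kind of candidate the paragraph above describes for replacing with an electronic or smaller-group alternative.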

In my capacity as Agile Manager, I saw something like this done with Code Reviews. The acquisition of a product called Code Collaborator enabled two improvements to occur: first, a dramatic increase in the amount of peer reviewing of code changes (thus improving the quality of the delivered product); and second, the ability for developers and testers to review code at their desks, during breaks between other activities. The win-win nature of this change, whereby both the product's quality and the impact on the schedule were positively affected, made it an outstanding success story.

One of the biggest challenges to making changes toward improved productivity, of course, is that it involves change. As anyone who's ever moved to Agile knows all too well, change is hard. Some people resist it, and many of those individuals are quite adept at throwing up obstacles to any change that they feel threatened by. The introduction of Automated Tests, for example, may represent a huge peril to anyone who's built a career around manual testing. The trick, of course, is in helping those people see what sorts of operations Automated Testing is appropriate for, as well as re-purposing the human brains that used to do that work to something that can't be automated. It's not easy, but it can be done... and the end result is improved productivity.

Other types of change that may meet resistance involve switching up how others do their job. Even something as seemingly trivial as moving from a big meeting room setting to doing something electronically may cause some folks to recoil in disgust. They may actually like attending meetings, for one thing. But beyond that, they may have legitimate concerns about how effectively a set of requirements can be reviewed without the back-and-forth discussions that they're used to in those meeting rooms. How you handle those objections will go a long way toward determining how far you get toward your goal of productivity increases. As someone well acquainted with "inspect and adapt" these days, I recommend that you set up any alteration as an experiment, and then monitor the results so that everyone can see whether it's working out or not.

I think a basic question to be prepared to ask at every step is, "What value are we getting from this part of the process?" That can be an unpopular query to pose, especially when you get to the part where, for example, Bob's entire raison d'être is called into question. You can bet your bottom dollar that Bob will have a handful of justifications at the ready for why filling out that form in triplicate (two of which get shredded) is a key component to the checks and balances of the entire company. Being able to handle situations like that requires more tact than I could ever advise on, so don't be afraid to seek out your best diplomat in management if you find yourself down that path.

The bottom line, I guess, is that you can make productivity gains, if you put your mind to it. It just requires an analytical approach and a willingness to change. One of the alternatives, of course, is that all of your jobs get outsourced to a country where the knowledge industry works at less than half your rate... and nobody wants that, I'm sure!

Labels:

Change,

Leadership,

Learning

Wednesday, June 17, 2009

The Software Development Cycle

With my recent contract now complete, I thought I'd post a few non-specific details about what I did during it. While it's not completely germane to Agile, you'll see a few places where there was some overlap, at least.

There were basically three parts to complete as part of the contract:

- review the organization's current software development process

- compare that picture to what a more standard development process would look like

- provide recommendations on changes or enhancements that could be implemented to make things even better

In order to get a clear idea of how development happened in this company, I read over a fair number of documents that related either to standards, or to recent projects, within the organization. From that exercise, along with a few strategically-timed interviews with personnel there, I was able to figure out what types of artifacts were produced, as well as which practices were followed. I'd describe what I saw as a fairly lightweight model, which is what I'd expected to find, given the age (fairly young), size (6 to 8 developers) and position within the company (an Information Technology group within an industry that's far removed from being an IT provider). In some areas, it was really only rigour that was missing; in others, there was simply a lack of experience with "best practices for large-scale software development", let's say. And that's the sort of thing you bring a consultant in for, anyway.

For the comparison aspect, my boss suggested a Gap Analysis in the form of a spreadsheet, which I thought was quite appropriate. The U.S. arm of the company had previously brought in-house a very comprehensive System Development Life Cycle model for the American IT groups (which are considerably larger than the Canadian one located here), and I was happy to use that as my baseline for the comparison. This model was very thorough (and very gated, as befits a Waterfall approach) and so it provided an excellent "ideal" for me to measure their setup against. There were roughly 150 artifacts or activities included in it, and so I was able to evaluate my clients' methodology in terms of which of those items they included, and (in a few cases, at least) how their versions measured up to the baseline.

Once the Gap Analysis was complete, I identified what I believed to be the highest priority gaps. This ended up being just over 20 items. For each, I then wrote up a Recommendation, in the following format:

Recommendation: xxx

Benefits: xxx

Approach: xxx

Effectiveness Metrics: xxx

In other words, I provided the what, the why, the how, and some notion of how to measure the results to make sure that they weren't wasting their time making the change.

That Gap Analysis spreadsheet, which included the 20-some Recommendations, was the primary deliverable from my contract. However, in talks with my boss we decided that a couple of other concrete artifacts would be desirable to come out of this, as well. The first was a skeleton Microsoft Project Plan that included all of the steps from the "ideal" SDLC model, customized somewhat to their environment, to make it easier for all of those steps to be remembered in the future. Also, because I considered a thorough and comprehensive Release Plan to be a prime example of where some additional rigour would help them out, I provided a template for that, too.

Those three documents were reviewed with most of the development staff on my last day there, and were extremely well-received. I had tried to incorporate as much feedback from the staff as I could in the content of my deliverables, and I think they appreciated that. One example was the inclusion of a recommendation that features be planned in a way that allowed them to be completed more serially than they had been in the past, so as to allow at least some testing of some of the features before they were passed on to the business area (who, in that environment, act as "QA"). That, and some of my unit testing suggestions, definitely benefited from my two years as Agile Manager!

All in all, it was a good learning opportunity for me, and I think that the clients received good value from my 22 years of IT experience. I think that if the timing had been different, such that the people there who needed to play a bigger part had had more time available, then it could have gone better and more immediate gains might have been realized. But as the poker enthusiasts say: you have to play the hand you're dealt, and that's what I did.

Labels:

Learning,

Metrics,

Project Management

Sunday, June 7, 2009

Separating The How From The What

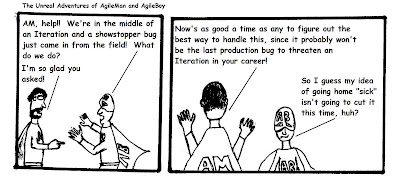

Poor AgileBoy!

One of the most common forms of "oppression" that I used to hear about in my role as Agile Manager involved Product people (or other representatives of management) going well beyond their mandate of providing "the what" as they ventured into the thorny territory of "the how". I doubt that it was ever intended maliciously, no matter how much conspiratorial spice was attributed to it by the development team members... but if that's true, then why did it happen?

First, though, I suppose I should explain what I'm talking about. In most Agile methodologies, there's a principle that says that the Product Owner indicates what the team should work on - in the form of a prioritized Product Backlog - and the team itself figures out how best to deliver it. Thus, "the what" is the province of Product and "the how" is up to the team. It seems like a pretty sensible, straightforward arrangement, and yet it often goes off the rails. Why?

One cause for a blurring between the two can be Product people with technical backgrounds. We certainly had that in spades with our (aptly named) Technical Product Owners, most of whom were former "star coders" who had moved up through the programming ranks into management earlier in their careers. I'm sure it must have been very difficult for some of them to limit themselves to "the what" when they no doubt had all kinds of ideas - good or bad - about what form "the how" should take. In the most extreme cases, I suspect that there were times when our TPOs actually did have better ideas on how to implement certain features than the more junior team members did... but dictating "the how" still wasn't their job. In that sort of setup (where the Product area is made up of technical gurus), what would have been preferable would have been an arrangement where those TPOs could have coached the team members on design principles and architectural considerations in general, as part of the technical aspect of their title. That healthier response would have still accomplished what I assume was their goal - leading the team to better software choices - without overstepping their bounds and causing a schism between product and development.

A simple lack of trust is another factor that can lead people who aren't responsible for "the how" to try to exert their influence on those who are. Again, I saw this in action many times as the Agile Manager. I'm not going to paint a black and white picture and pretend that it was always warranted, nor that it was never warranted. But the important point here is that dictating "the how" downward in that manner usually did more harm than good, and it certainly did nothing to close the trust gap. For one thing, having "the how" taken out of their hands gave those developers who were already skeptical about management's support of Agile more ammunition with which to say, "See? It's all just lip service! We're not really being empowered here!" It was also true, in some cases at least, that "the how" being supplied wasn't particularly well suited to the new development environment that was emerging. What with all of its automated tests, refactoring and focus on peer reviews, the code was resembling what "the old guard" remembered less and less with each passing Iteration. I experienced this first hand, as I'd make some small suggestion about how to tackle something that I thought might be helpful, only to get a condescending look and "Uh, it actually doesn't work like that anymore" from someone half my age. Yeah, that's tough on the ego, but it's also completely the right response.

As for why the trust gap was there in the first place, well... as both of my AgileMan books lay out in gory detail, lots of mistakes and missteps were being made. It wasn't exactly smooth sailing, let's say. The result of that, however, was that some among management apparently felt that the teams couldn't be trusted, and so they attempted to exert more and more control over them, in whatever ways they could. Had they simply applied that energy to improving the requirements gathering techniques being used within our organization, though, it would've benefited us more. Providing clear sets of Acceptance Criteria and good, well thought out constraints would certainly have done more to improve the product features that our teams were developing than any amount of "meddling" in the mechanics of the software development process itself ever did.

I imagine that it's often difficult to keep "the how" separated from "the what" as you work in an Agile environment, but it's critical that you do. After all, the people doing the work are in the best position to know how to do it, and that principle has helped make Toyota one of the premier auto manufacturers in the world. We could do a lot worse than to follow their example in the Information Technology business!

Labels:

Change,

Leadership,

Learning,

Trust

Saturday, May 23, 2009

Agile Is In My Blood

It's striking to me how different my perspective is these days, as a result of my time as the Agile Manager at my previous job. I'm currently working in an environment that's not in the least Agile, and yet I still seem to perceive what's going on around me with Agile-Vision, as it were.

For example, I've spotted an instance where the separation between the business people and the development team has caused some issues, and so it seems fairly clear to me that that gap needs to be bridged somehow. It seems to me that there are lots of options available to be considered, even outside of an Agile methodology.

I can also see where delivering what's promising to be a fairly large feature set for the current project in smaller chunks will almost certainly help, even if the "delivery" here simply means working on the features in such a way as to allow the business folks to play around with them as they "come off the line". This won't be the Agile practice of working within short Iterations and having each small group of features "done done done", but at least it'll open up the possibility of getting feedback on many of the pieces before the development cycle is complete.

And finally, I've begun tossing around the idea of using something akin to a Retrospective for areas where the general consensus is that not enough is being learned or improved upon. That may apply to relationships between groups, or to preventing the same mistakes made in Phase N of the project from being repeated in Phase N+1. When I described what a Retrospective was to a few people, they seemed to like the idea ("like a Post Mortem, but focused on improving and learning instead of blaming and arguing"). So we'll see how that flies.

It's certainly odd to be back in a work environment that's so different from what I was used to, and yet to still be experiencing many of the same challenges. Go figure!

Saturday, May 9, 2009

A Horse Of A Different Colour

I'm venturing back into the work force on Monday, but with a twist. After 22 years of full-time employment, this will be my first experience as a contractor. Also, the work I'll be doing looks to be at least somewhat unlike anything I've ever done before.

I'll be performing a review of the development environment of a local company, in order to provide some guidance as they continue to refine their practices and processes. I think that I landed the contract on the strength of a couple of factors in particular: my rather far-reaching involvement in my last job as the Agile Manager, as well as the general breadth and depth of my resume to date.

On that former point, it seemed relevant in the job interview that I had had some recent experience bringing change to an organization. I talked about how much I'd learned in the process, especially regarding the importance of being patient with people as they absorb information and evaluate it through their own personal filters. There's also naturally an element of trust required in something like that. I likened it to the expression about leading a horse to water but not being able to make it drink. My job often seemed to involve repeating that process, over and over again, until the horse finally trusted me enough (or maybe just got thirsty enough!) to take its first sip. (Not that I think of co-workers as horses! It's just an analogy!!)

By the same token, I think that the wide-ranging roles that I've performed over the past two decades also helped get me a foot in this particular door. I've worked on big mainframes and small client/server infrastructures; I've coded procedural algorithms (COBOL, C, Assembly language) as well as Object-Oriented solutions (C++, Java); I've been a "do-er" and a manager ("do-nothing-er"?); I've worked within well-understood roles but at other times had to define my own job. In fact, my resume, when I take the time to actually look at it (usually when I'm updating it), speaks volumes about the fact that I like new challenges. I've tended to change "jobs" (but not employers) at a pretty predictable rate of roughly every two years or so! And that's been true all along, showing that even as a wet-behind-the-ears programmer back in the 80s I was always looking for change as soon as I got comfortable with what I was doing. I think the pattern usually went: a few months of being a newbie, a year or maybe two of competence or better, and then on to the next thing. That cycle has given me a lot of varying experiences to draw on by now, it seems.

At any rate, I'll definitely be learning about a whole new set of parameters, starting next week. I'll be comparing what I see in front of me to my mental backlog of best practices. I'll be discovering what strengths and opportunities are in effect in an environment that's quite different from anywhere I've worked before, and making recommendations on each. And I'll be building up a bunch of new relationships, right from scratch. All of which sounds like something that should be right up my alley, I hope!

Labels:

Change,

Leadership,

Learning,

Trust

Sunday, May 3, 2009

No AgileMan At Agile 2009

I got the official word in the middle of last week that my proposed session for the Agile 2009 conference, "The Real-Life Adventures of AgileMan", didn't get accepted for inclusion in the program. In fact, of the 11 proposals that were made by people within my circle of friends (including my own), only one of them made the cut. Therefore either we're all very inept at writing up interesting session descriptions, or the competition was very fierce this year.

I don't know that my own efforts at "selling" my session idea were all that impressive, but I figured that I'd done OK. For the sake of posterity - and to let the readers of this blog judge for themselves - here's what I had written up:

Overview

What’s really involved in “going Agile”? Every Agile adoption has its own unique challenges and rewards, and I’ve written 2 books about my company’s experiences moving from Waterfall to Agile. My focus is always on lessons learned, and we had literally dozens of those by the time we were done! In this session, I’ll be talking about early successes, what surprised us, where we dropped the ball, why role definitions turned out to be so important, where coaching would have helped, and many other similar topics. I think anyone considering a move to Agile would benefit from attending.

Process/Mechanics

In the 2+ years that I spent as my company’s Agile Manager, I was involved with every aspect of our transition to an Agile methodology. I worked closely with the Project Managers, Development Teams, Product Owners, Information Technology and the company’s Executives over that span, and also had several stints as Agile Coach to some of the teams. I’ve written two books (“The Real-Life Adventures of AgileMan (Lessons Learned in Going Agile)” & “More Real-Life Adventures of AgileMan (Year 2: Easier Said Than Done)”) about our experiences, describing our lessons learned so that others might benefit from what we discovered.

Each of my AgileMan books contains 32 chapters, covering a range of topics related to Agile adoption, all told in a conversational tone from the perspective of someone who went through it all first hand. As reflected by the Learning Outcomes for this session, I always regarded our 2-year journey of “going Agile” as having a narrative thread to it. That perspective made it quite easy for me to transform what might have otherwise been fairly dry material into what ended up being a very entertaining series of stories (I’m told). The focus of the books is always firmly on what was learned, even to the point that each chapter ends with a Lessons Learned summary page recapping the key insights that were gained on each particular topic. I believe that the material would therefore lend itself quite naturally to a 90-minute (or longer) interactive PowerPoint presentation in which I recount the key experiences that we had, following the narrative flow from the books, and answer questions from the audience. I’ve done this same sort of thing for various university Computer Science classes here in Ontario and have had great results to date. Obviously the treatment would, by necessity, be somewhat different within an Agile-centric environment like Agile 2009, but I would imagine that anyone attending the conference because they’re considering a move to Agile would find such an in-depth experiential discussion invaluable.

For the conference, I plan to do the following:

- introduce myself in terms of background

- explain why I wrote the two AgileMan books

- provide context for how the material in the books is organized

- spend about 5 minutes on each of a range of topics from my books, including fielding questions from the audience:

  - the pitfalls that may develop when Agile is mandated down from the top (rather than beginning as a grass roots movement)

  - some early signs of trouble (role confusion, under-staffing, shaky legacy code)

  - considerations around team composition

  - areas where management may send the wrong signals by only “talking the talk” without “walking the walk”

  - the challenges involved with promoting a cooperative (Agile) environment while still employing compensation systems that are essentially competitive

  - the pros and cons of co-location

  - what to look for in a good Agile Coach

  - where metrics can do more harm than good

  - the role of an Agile Champion

  - areas where teams may struggle with providing good estimates

  - what can happen when you consistently try to do more with less

  - the perils of redefining job titles when not everyone has bought into the new system

  - the problem of bug debt

  - what it may mean if your team members begin talking about “zone time”

  - what a team may look like when it’s sticking to its principles even in the face of opposition

  - how to build up trust within the organization, and what can happen if you don’t

Learning Outcomes

- Why we believed that Agile was the right solution to our problem

- How we went about transitioning to Agile

- What the early surprises were

- Where Agile produced the biggest rewards initially

- Which areas of the company struggled the most with Agile

- How Agile was received and perceived by different parts of the company

- What Agile can reveal about an organization’s culture

- What roles proved the most difficult for us to get right

- Where we needed help (and often didn’t seek it out)

- What we might have done differently

While the e-mail notification that I received indicated that I could check my proposal for more feedback on it, there actually wasn't anything there to indicate why it wasn't accepted or what might have made it more attractive.

Oh well... I'm sure it would have been fun to do, and that any attendees in the audience who were either considering an Agile adoption or just starting down the path of one would have gotten a lot out of it. Heck, I wish someone had provided some of the insights that I intended to include in the session back in 2006 when I attended my first Agile conference!

Friday, April 24, 2009

Experimentation Takes Discipline

One of the key principles of any Agile methodology involves "inspecting and adapting." For those who are in the position of Agile Coach or Scrum Master, that sometimes gets represented in the mythical handbook as "encouraging experimentation." I personally think that it's one of the least understood and (perhaps accordingly) most poorly-applied Agile concepts, for a variety of reasons.

For most of us, success is almost always a goal. We want to do well at whatever we take on. And why not? Our performance reviews focus on how successful we were in various aspects of our job; our sense of self-worth is often dependent on how successful we believe others perceive us to be; and it just simply feels much better to "win" than to "lose." Unfortunately, though, experimentation brings with it the ever-present prospect of at least the appearance of failure, especially if one isn't careful about how one approaches such things. For example: if you take a bit of a gamble on something new in the course of your job (or personal life) and can see only two possible outcomes - it either works, or you're screwed! - then it's not hard to imagine that a "bad" result might make you less likely to ever again try something like that in the future. The problem could be that there was simply too much riding on the outcome to justify the risk in the first place, or that you didn't frame the experiment properly. For the latter case, a better setup would have been: "either this works and I have my solution, or I learn something valuable that gets me closer to finding the right answer." As long as learning and moving forward from your new position of greater enlightenment (what some short-sighted folks might indeed call "failure") is an option, then experimentation is a good avenue to go down.

What that implies, however, is that there's at least some degree of discipline involved. Randomly trying things until you find one that works, for example, isn't experimentation. What's required are some parameters that say, right from the start, what you're intending to learn from the experience. Determining whether your current architecture can support the new load that you're considering adding to it is an experiment that you might undertake, but ideally you'd like to get more than simply a "yes/no" answer from it. "Yes" is fine as an outcome (woohoo!), but you'd really like to elaborate on "no" with some results that provide insight into "why not?" and maybe even "where's the problem?" And therefore you'd design your implementation of the experiment with that in mind. Similarly, if you were on an Agile team and were going to try out pair programming as a possible practice, you'd want to establish a way of gathering results that provide you with lots of data. Subjective observations from those involved would be good, but so would some form of productivity measurement that would allow you to see - more objectively - whether pairing up team members causes the team's velocity to go up, down or stay about the same.
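The objective half of that pair-programming trial could be as simple as comparing average velocity over a fixed number of Iterations before and during the experiment. Here's a minimal Python sketch; the 10% "about the same" band, the function name and the sample numbers are all assumptions for illustration, and velocity is meant to sit alongside the team's subjective observations rather than replace them:

```python
from statistics import mean


def compare_velocity(baseline, trial, tolerance=0.10):
    """Compare average velocity before and during a trial (e.g. pair programming).

    baseline, trial: Story Points completed in each Iteration of the two periods.
    tolerance: relative change treated as "about the same" (an assumed threshold).
    Returns (average before, average during, relative change, verdict).
    """
    if not baseline or not trial:
        raise ValueError("need at least one Iteration in each period")
    before, during = mean(baseline), mean(trial)
    if before == 0:
        raise ValueError("baseline velocity must be non-zero")
    change = (during - before) / before
    if change > tolerance:
        verdict = "up"
    elif change < -tolerance:
        verdict = "down"
    else:
        verdict = "about the same"
    return before, during, change, verdict


# Hypothetical numbers: three Iterations before pairing, three with pairing.
before, during, change, verdict = compare_velocity([20, 22, 21], [24, 25, 23])
print(f"velocity {before} -> {during} ({change:+.0%}): {verdict}")
```

Fixing the number of trial Iterations before starting, rather than stopping whenever the numbers happen to look good or bad, keeps the comparison honest. With only a handful of Iterations, a swing of this size can still be noise, which is one more reason to pair the numbers with the team's own observations.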

The final thought I have on this topic involves the notion of sticking with it long enough to actually get a result. That may sound obvious, but I've lost track of the number of times I've seen people start to try something new and then abandon it partway along. One way to avoid this is to establish, right at the outset, what the duration of the trial will be, as well as how the results will be gathered and measured. Among other things, that sets expectations and can prevent the impression of thrashing that might otherwise form in the minds of those who didn't realize that it was only an experiment in the first place. This could be a team trying something new and making sure that their Product Owner understood that it might "only" lead to learning, or a group of executives trying out a new organizational structure with the intention of re-assessing it after six months to see if it was working.

When I was asked at a recent speaking engagement whether I thought Agile was suitable for industries outside of software development, I mentioned that I thought scientific research, for example, had always been "very Agile." The very nature of good scientific work, after all, is to form a hypothesis and then conceive a test - or series of tests - to either prove or disprove it. The best scientists understand that sometimes you can learn more from a "failed experiment" than you can from one that gives you the results that you expected, and that that's a good thing. But that's only true if you're still paying attention by that point, rather than giving up or banging your head against the wall.

Wednesday, April 15, 2009

The Story Point Workshop

I've been meaning to write up a description of the Story Points Workshop that I designed and delivered in 2007 and 2008, but hadn't gotten around to it... until now. I figure that there's always a chance someone will stumble across this blog and be interested in getting some training on Story Points, and so I really should have something here by way of a sales pitch. If you're reading this and would like to know more, please contact me at AgileMan@sympatico.ca and we can discuss it further.

The workshop that I created is intended to be appropriate for anyone involved in the development of software: programmers, testers, integrators, project/program managers, product owners, team coaches and even executives. In other words, you have to possess at least a basic understanding of the process of software development in order to be able to complete the exercises. Note, however, that you don't have to be of a technical bent, as there's nothing in the workshop that requires you to design, program, or even debug any software.

My goal in providing the workshop has always been to increase the attendees' understanding of, and comfort with, Story Point estimates. At the end of the day, I want everyone who took part to feel confident that they can use Story Points themselves - either as producers of them, or consumers - as part of the release planning process.

To get a large group of people, often with disparate skills and perspectives, all the way to that goal, I use a format that's proven to be both effective and fun (based on the results of feedback surveys that I've done at the conclusion of each session). Basically, the day-long workshop goes as follows:

Part 1: Introduction of Story Points

Here I cover the concepts of relative sizing (as compared to absolute) and what Mike Cohn characterizes as "Estimate size; derive duration." I do this through a combination of slide presentation and interactive exercises, the latter of which allow the group to do some estimating on things not at all related to software (e.g., figuratively moving piles of dirt). This 60- to 90-minute section ensures that everyone has a solid foundation of understanding around the conceptual portion of Story Points before we move on to applying that knowledge.

Part 2: Estimating in Story Points

For this part of the day, which takes up the majority of the workshop, I introduce a new application called "the Meal Planner." This is a Java program that I wrote years ago, but the attendees don't really see it or any of the code for it. Instead, what they see is a description of what it is, as well as a set of additional features for it. Because the Meal Planner is a simple and fun concept that anyone can grasp within a few minutes, it provides a perfect backdrop to use in understanding how to Story Point items. No one in attendance has any history with it, and therefore it's unlikely that anyone would dominate the proceedings based on any real or imagined "expertise" with it.

The attendees are then broken up into smaller groups of 5 to 7 people and physically separated (by team) as much as possible. Each team is tasked with coming up with Story Points for each of the items on the Meal Planner's product backlog. I serve as the Product Owner for each group, running back and forth between them in order to answer questions or offer clarifications to the requirements that they've been given. This exercise typically takes between two and three hours to complete, as the teams often get off to very slow starts while they go through the "forming/storming/norming/performing" cycle.

Part 3: Comparing the Results

When all of the teams have produced their Story Points for the complete set of backlog items, I bring everyone back together, post their results up on the walls and encourage each team to review what the others came up with. Then I have a representative from each team briefly go through their rationale for each estimate, allowing the other teams to comment or question them. Sometimes the teams will have arrived at similar estimates; often they'll be quite different. We discuss what sort of factors contributed to those results, and build up a list of "What Worked and What Didn't" that helps the attendees understand what sort of things contribute to better estimating versus what works against that goal. I usually also provide my own Story Point estimates (after everyone else has), based on having written the application. All told, this section usually requires about an hour to get through.

There's a slight variation on the above that I've done in one instance, which proved quite insightful for all involved. I had 2 groups in that case, and so I decided to spend most of my time with one, at the expense of the other. Also, I'd planned it so that the "AgileMan-starved" group got a different set of requirements than the other group, with slightly less detail in it. The results of this disparity were striking, to say the least: in many cases, the "starved" team produced estimates that were completely at odds with both what the "better-fed" group came up with and the "expert's Story Points" that I provided. I think this sort of thing is probably only appropriate if the workshop is being set up for an environment where the product people are struggling to fulfill their responsibilities (as was the case where I did it).

It's possible to get all of the above done in as little as about four and a half hours, although that's awfully rushed and not very conducive to learning. I prefer to schedule it for either five or six hours, in order to allow for regular breaks and to give people the opportunity to reflect on developments as we go along. Something like a 9:30 start, with lunch brought in, and a wrap-up by 4:00, works best. I've done the workshop with as few as a dozen people, and as many as 25 attendees, and both extremes seem to work fine. With fewer than 10 to 12 people, it's difficult to get 2 groups that can compare results. More than 25 would provide quite a logistical challenge for me to facilitate once the smaller groups are formed.

When I took this workshop on the road to a company in the San Francisco area in late 2007, I did 3 sessions there (one per day) and received an average score of 8.7 (out of 10) in terms of "overall quality." One of the company's Vice-Presidents recently recommended me to a colleague based on those workshops, saying that I had "received the most positive reviews from folks that any trainer has at our site, and we've had quite a few." So I like to think that it left a good impression!

Labels:

Learning,

Project Management,

Training

Friday, April 10, 2009

"My Life As A PM Is Over: The Company Just Went Agile!"

Last night was my presentation on Agile Project Management to the local chapter of the Project Management Institute, and based on the electronic polling results that were collected right after I finished, I think I did fairly well. Each member of the audience was given a handheld device about the size of a small calculator and then asked to respond to a series of statements along the lines of: "The topic presented by tonight's speaker was informative and interesting" and "Tonight's speaker appeared to be well-prepared" by selecting, on their device, either "1" (strongly agree), "2" (agree), "3" (disagree) or "4" (strongly disagree). For those who were having trouble figuring out how to work their new toys (since this was the first time they had tried this approach), I helpfully offered the advice, "Just press the button labeled '1'!" In most cases, I received scores where the total of "1"s and "2"s put me at or above the 80% mark, which was gratifying to see.

As for the material itself, I had worried that I had too much to cover in 2 hours, but somehow it all worked out, even with a 15-minute break midway through. I spent the first half introducing Agile, and then called for a short break. However, I asked that, during the break, everyone write down how they thought they might react if they went into work on Monday and their boss walked up to them and announced, "Guess what? We're going Agile in a month!" Just before resuming, I collected their responses and read them out for everyone to hear. Here are some representative samples of what I got:

- "Agile? Can we get the Functional Managers to support it?"

- "Good, [but] first educate the clients."

- "Let's go!"

- "Can we still perform accurate Earned Value analysis?"

- "Great, but how do I co-locate with my global team?"

- "1 week cycles??"

- "But what's wrong with RUP?"

- "Great, let's try it. As long as we follow ALL of the steps, not only parts of it."

- "How do we break deliverables into iteration-sized chunks?"

- "Risk of scope creep."

- "How do you budget??"

- "What are the signs that we NEED Agile?"

- "Do Agile? We don't even do Project Management!"

During the Q&A session at the end, many of those same concerns were expressed once again. One woman was sure that no budget would ever be approved without a guarantee that the work done would match what was being approved, to which I said, "If that sort of arrangement is working out for you right now, then I don't know why you'd ever want to move to Agile. The organizations that move to an Agile methodology are the ones where things change considerably between initial concept and final installation." Another fellow was understandably concerned about how to create automated tests to replace the extensive manual testing that they currently do at the end of each project, and I said that that was one area where you'd want to allocate time in order to research the new products and services that are coming out on the market for that purpose. "But in the end," I said, "you really don't have any choice: you need to have automated testing, or you'll never be able to do short cycles." I also got asked whether Agile was really only appropriate for "UI companies", and in response I was able to describe some of the very different types of organizations I've heard of - or seen! - doing Agile.

The moderator eventually had to call an end to the Q&A, as I think it might've gone on for quite a while longer otherwise. I received a $25 Chapters gift card for my troubles (which was nice, and unexpected) and eventually took off home to unwind. Sadly I didn't make any book sales, despite having several copies with me for just that reason. Maybe next time!

Labels:

Book News,

Learning,

Project Management,

Training

Friday, April 3, 2009

On The Technical Debt Topic

Just a quick note to point interested readers toward this very good post on that subject. It's well worth the 5 minutes or so that it'll take you to read.

Wednesday, April 1, 2009

The Project Manager "Problem"

I've been invited to speak at the next scheduled meeting of the local chapter of the Project Management Institute. It was requested that I make my presentation "about Agile" but the rest of the direction was pretty much left to my imagination and questionable discretion. As such, I've come up with material under the banner of:

"My Life As A PM Is Over: The Company Just Went Agile!"

Now, that title is most certainly intended to be attention-grabbing, humourous and perhaps even a little ironic. That latter characteristic stems from the slightly nebulous view in which some in the Agile community hold Project Managers. At one extreme fringe of perspectives on the topic is the one that says, essentially, "Now that you've gone Agile, you can get rid of all your PMs!" A more moderate stance shows up in some of the Scrum literature, which advocates for having most or all of your Project Managers transition into the Scrum Master role. All the way at the other edge of possibilities lies the approach that I saw undertaken while I was the Agile Manager in my last job: keep the PMs as PMs, and figure out how to make that predictive, deadline-focused paradigm work in an iterative, adaptive environment. (Those who've read my two AgileMan books will recall that, many good intentions notwithstanding, we struggled greatly in integrating those two very different world views.) Even with that attempt to keep things somewhat as they were in the PMO, though, I think all of the people involved would agree that the PM role changed considerably as we moved through our Agile transition. Therefore, in all cases, there's some bitter irony to saying, "My life as a PM is over..."